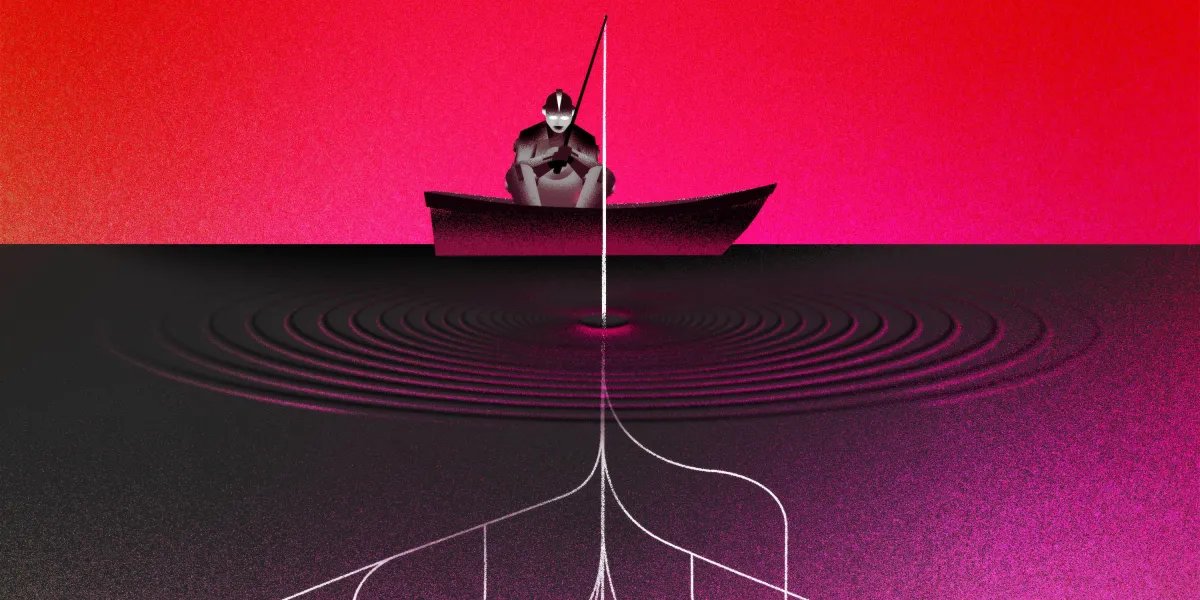

Just as software engineers are using artificial intelligence to help write code and check for bugs, hackers are using these tools to reduce the time and effort required to orchestrate an attack, lowering the barrier to entry for less experienced attackers.

Some in Silicon Valley warn that AI is on the brink of being able to carry out fully automated attacks. But most security researchers instead argue that we should be paying closer attention to the much more immediate risks posed by AI, which is already speeding up and increasing the volume of scams.

Criminals are increasingly exploiting the latest deepfake technologies to impersonate people and swindle victims out of vast sums of money. And we need to be ready for what comes next. Read the full story.

—Rhiannon Williams

This story is from the next print issue of MIT Technology Review magazine, which is all about crime. If you haven’t already, subscribe now to receive future issues once they land.

Is a secure AI assistant possible?

AI agents are a risky business. Even when stuck inside the chatbox window, LLMs will make mistakes and behave badly. Once they have tools that they can use to interact with the outside world, such as web browsers and email addresses, the consequences of those mistakes become far more serious.

Viral AI agent project OpenClaw, which has made headlines across the world in recent weeks, harnesses existing LLMs to let users create their own bespoke assistants. For some users, this means handing over reams of personal data, from years of emails to the contents of their hard drive. That has security experts thoroughly freaked out.

In response to these concerns, its creator warned that nontechnical people should not use the software. But there’s a clear appetite for what OpenClaw is offering, and any AI companies hoping to get in on the personal assistant business will need to figure out how to build a system that will keep users’ data safe and secure. To do so, they’ll need to borrow approaches from the cutting edge of agent security research. Read the full story.

—Grace Huckins