At this point, it’s safe to assume you’ve used a chatbot like ChatGPT or Gemini. Besides asking general questions or getting long texts summarized, you might have asked a health question, too. Maybe you were trying to figure out if a symptom was worth worrying about, or make sense of lab results, often late at night when a doctor isn’t available.

OpenAI’s January 2026 report found that more than 5% of all ChatGPT messages globally are about health care, and that more than 40 million people worldwide ask it health care questions every day.

(Disclosure: Ziff Davis, CNET’s parent company, in 2025 filed a lawsuit against OpenAI, alleging it infringed Ziff Davis copyrights in training and operating its AI systems.)

In January 2026, OpenAI introduced ChatGPT Health to turn that habit into a dedicated feature. This “health-focused experience” inside ChatGPT is designed to help you understand medical information and prepare for real conversations with clinicians.

It is not a diagnosis or treatment tool.

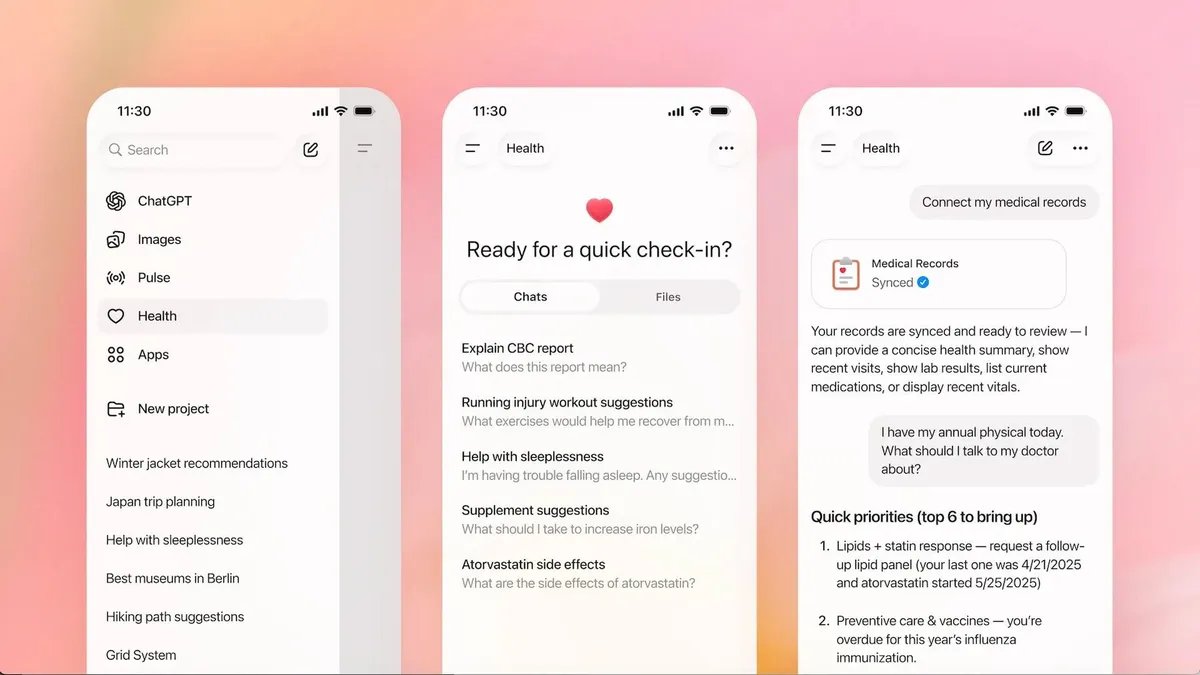

So what exactly is ChatGPT Health, and how does it differ from asking a chatbot a random health question? Let’s double-click the new Health tab in ChatGPT.

What is ChatGPT Health?

ChatGPT Health isn’t a separate app like OpenAI’s AI browser, Atlas. It lives inside ChatGPT as a dedicated space, or tab, focused on health-related questions, documents and workflows.

The Health tab in ChatGPT.

In its release note, OpenAI says that over two years it worked with more than 260 physicians who have practiced in 60 countries across dozens of specialties, and that they reviewed health-related model responses more than 600,000 times. As a result, Health doesn’t answer medical-sounding questions in the same open-ended way as a normal chat. It responds more cautiously, with stricter limits on how information is explained and clearer prompts to seek professional care.

ChatGPT Health is available on the web and in the mobile app. You don’t need to download anything or sign up outside ChatGPT itself. Access depends on location and rollout stage, but you can join the waitlist.

As of early 2026, it’s available in the US, Canada, Australia, parts of Asia and Latin America, where ChatGPT already supports health features. It’s not currently available in the European Economic Area (which includes the EU), the UK, China or Russia. OpenAI has said availability will expand, but timelines vary by region due to local regulations and health data rules.

How ChatGPT Health works

ChatGPT Health uses the same underlying large language models (LLMs) as ChatGPT. You ask a question, and the model generates a response. The difference with Health is context, grounding and constraints.

Alex Kotlar, founder of Bystro AI, a genetics-focused LLM platform for health insights, told CNET that OpenAI didn’t build a new foundation model for health.

“They haven’t created a model that suddenly understands medical records much better. It’s still ChatGPT, just connected to your medical records,” Kotlar said.

Health draws from the data you choose to sync, but it can’t access it unless you explicitly grant permission. Besides medical records, you can connect apps such as Apple Health, lab results from Function and food logs from MyFitnessPal. You can also link Weight Watchers for GLP-1 meal ideas, Instacart to turn meal plans into shopping lists and Peloton for workout recommendations. This allows the AI to provide personalized insights based on your history, rather than generic advice.

Health conversations follow stricter rules around tone, sourcing and response style, which OpenAI says it tests using its evaluation framework, HealthBench. HealthBench uses physician-written rubrics to grade model responses across 5,000 simulated health conversations, applying more than 48,000 specific criteria to assess quality and safety.

You can upload documents to Health, use voice commands and do everything you’d normally do in a regular chat. If you’re reviewing multiple test results or prepping for a specialist visit, Health can keep track of what you’ve already shared and help organize information over time.

OpenAI suggests using it to review lab results, organize questions before an appointment, translate medical language into plain English and summarize long documents like discharge notes or insurance explanations.

OpenAI is explicit that the tool is meant to support conversations with health care professionals, not shortcut them. It can’t order tests, prescribe medication or confirm a diagnosis. If you treat it like a doctor, you’re using it incorrectly.

But Dr. Saurabh Gombar, clinical instructor at Stanford Health Care and chief medical officer at Atropos Health, told CNET, “I think preparing and education itself actually already crosses a boundary into being medical advice.”

Health keeps conversations, connected apps, files and Health-specific memory separated from your main chats, so health details do not flow back into the rest of ChatGPT. However, Health can use memory from regular chats. Say you mentioned a recent move or lifestyle change, like becoming vegan. Health can draw on that context to make the conversation more relevant.

It can also track patterns over time. If you connect Apple Health, you can ask about sleep trends, activity patterns or other metrics, then use that summary to talk to your doctor.

ChatGPT Health and ChatGPT for Healthcare are not the same

ChatGPT Health is a consumer feature for personal wellness. OpenAI’s Help Center says HIPAA doesn’t apply to consumer health products like Health. HIPAA, the Health Insurance Portability and Accountability Act, is a US federal law that limits how health care providers, health plans and their business associates can use and disclose patients’ sensitive health information without consent.

Separately, OpenAI offers “ChatGPT for Healthcare” for organizations that need controls designed for regulated clinical use and support for HIPAA compliance, including Business Associate Agreements (the contracts HIPAA requires between health care organizations and vendors that handle patient data on their behalf, such as billing companies).

If you’re using ChatGPT Health, you’re not entering a hospital system, even though your medical records can be connected with the feature. You’re using a consumer product with additional protections that OpenAI controls. So don’t assume “health feature” automatically equals HIPAA.

Privacy and data controls

OpenAI says Health adds extra protections on top of ChatGPT’s existing controls, including “purpose-built encryption and isolation to keep health conversations protected and compartmentalized.”

An OpenAI spokesperson told CNET that conversations and files in ChatGPT are encrypted at rest and in transit by default, and that Health adds additional layered protections due to the sensitive nature of health data. The spokesperson added that any employee access to your Health data would be limited to safety and security operations, and that access is more restricted and purpose-limited than typical product data flows.

“When consumers hear that something is encrypted, they often think that nobody can see it. That’s not really how it works. Encrypted at rest doesn’t mean the company itself can’t access the data,” Kotlar said.

You can disconnect apps, remove access to medical records and delete Health memories. Dane Stuckey, OpenAI’s chief information security officer, also says Health conversations are not used to train its foundation models by default.

Still, “more protected than regular chats” is not the same as risk-free. Even strong security can’t eliminate every risk tied to storing sensitive health information online. That’s one reason privacy experts have urged users to think carefully before uploading full medical records into any AI tool.

Limitations and safety concerns

ChatGPT Health can help you understand information, but it can still get things wrong. There’s a risk people will take Health’s answers at face value. People want answers fast, and AI can sound convincing even when it should be cautious. Unsurprisingly, ECRI, a patient safety nonprofit, listed the misuse of AI chatbots as the No. 1 health technology hazard for 2026.

Hallucinations, the AI habit of confidently producing incorrect details, matter far more in health care than when you ask a chatbot to summarize a PDF. If a tool fabricates a study, misreads a lab value or overstates what a symptom means, and you act on it, your health could be at serious risk.

“The biggest danger for consumers is that unless they have a medical background, they’re going to have a hard time evaluating when it’s saying something right and when it’s saying something wrong,” Kotlar said.

When asked to comment on hallucination rates, the OpenAI spokesperson said the models powering ChatGPT Health have “dramatically reduced” hallucinations and other high-risk errors in challenging medical conversations. According to OpenAI’s internal HealthBench evaluations, GPT-5 reduces hallucinations in difficult health scenarios eightfold compared with earlier models, cuts errors in potentially urgent situations more than 50-fold compared with GPT-4o and shows no detected failures in adjusting for global health context.

The company also says its newer models are significantly more likely to ask follow-up questions when uncertain, which it argues lowers the risk of confident but incorrect responses.

“Companies behind these tools need to share that they have put these sorts of checks and balances in place or these benchmarks to ensure that the quality of the answer is high,” Gombar told CNET.

OpenAI says Health is not intended for diagnosis or treatment and should support, not replace, clinicians’ care.

Kotlar said health care is complex and highly regulated, so tools like this can be “great, but also sort of irresponsible at the same time,” since a lot can still go wrong.

Should you use it?

People were already using ChatGPT for health-related questions even when it wasn’t the right place to do it. Because Health adds tighter guardrails for medical topics, it may be slightly safer than a standard chat for that purpose. Even physicians are using AI more in practice: That use nearly doubled between 2023 and 2024, according to the American Medical Association, which surveyed just over 1,100 physicians.

Gombar said interactions between traditional health care and AI are likely here to stay, and added, “there’s room for improvement and for benefit.” Many people don’t have reliable access to routine clinical care, and if LLMs can at least help triage whether someone should see a physician, that alone could be meaningful.

If you’re careful with the data you share, Health could be useful. You can use it to translate medical language or draft questions so you don’t freeze in a 10-minute appointment. You can also ask it low-risk questions about diet and exercise. Just always verify the information with reputable sources or a professional. Never use it to self-diagnose, decide whether to take or stop medication or interpret a serious symptom.

“Health care is not like coding or writing,” Kotlar said. “When it fails, it fails in ways that are really dangerous for a human being.”

ChatGPT Health can help you make sense of information, but it can’t take responsibility for your health. Like most AI tools, its value depends on how well you understand its limits.